Increasing Threat of Deep Fake Technology: “Think Before You Believe”

Science and technology are constantly advancing. Deep fakes, along with automated content creation and modification techniques, are merely the latest mechanisms developed to alter or create visual, audio, and text content. The term “deep fake” comes from the deep learning techniques used to create this particular style of manipulated content. Deep fakes are a subset of the broader category of “synthetic media” or “synthetic content.”

The threat of deep fakes and synthetic media comes not from the technology used to create them, but from people’s natural inclination to believe what they see. As a result, deep fakes and synthetic media do not need to be particularly advanced or believable to be effective in spreading mis- and disinformation. AI-generated text is another type of deep fake and a growing challenge. Researchers have identified a number of weaknesses in image, video, and audio deep fakes that can be used to detect them, but deep fake text is much harder to detect. It is not out of the question that a user’s texting style, which is often informal, could be replicated using deep fake technology. Cheapfakes are another form of synthetic media, in which simple digital techniques are applied to content to alter the observer’s perception of an event.

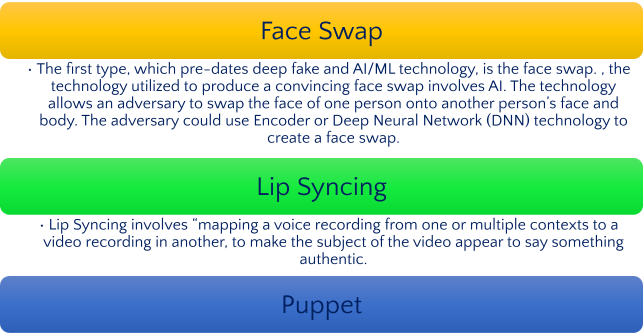

“One major harmful use of face swap technology is deep fake pornography”

The final deep fake technique represented on the timeline is referred to as the ‘puppet’. This technique makes the targeted individual appear to move in ways they did not actually move, including facial movements or whole-body movements. Puppet deep fakes use Generative Adversarial Network (GAN) technology together with computer-generated graphics.

Generative Adversarial Networks (GANs)

A key technology used to produce deep fakes and other synthetic media is the Generative Adversarial Network, or GAN. In a GAN, two machine-learning networks develop synthetic content through an adversarial process. The first network, the “generator,” produces synthetic examples intended to resemble the original training data. The second network, the “adversary” (often called the discriminator), examines the presented examples for flaws and rejects those that do not exhibit the same characteristics as the original data, identifying them as “fakes.” The generator learns from these rejections, so over many rounds its output becomes harder to distinguish from the real data.
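The adversarial loop described above can be made concrete with a deliberately tiny sketch in Python, assuming one-dimensional numbers instead of images. Everything here is an illustrative assumption rather than how real deep fake systems are built. The "real data" is samples from a hypothetical normal distribution N(2, 1). The generator `G(z) = z + b` only learns a shift `b`. The adversary (the discriminator) is a single logistic unit `D(x) = sigmoid(w*x + c)`. The function name `train_toy_gan` and its parameters are hypothetical. The two players take alternating gradient steps: the discriminator minimizes the standard binary cross-entropy, and the generator minimizes the common "non-saturating" loss `-log D(G(z))`.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_mean: float = 2.0, steps: int = 2000, batch: int = 64,
                  lr: float = 0.1, seed: int = 0) -> float:
    """Train a hypothetical one-dimensional toy GAN; return the generator's shift b.

    Real data:      x ~ N(real_mean, 1)             (stand-in for real media)
    Generator:      G(z) = z + b, with z ~ N(0, 1)  (only the shift b is learned)
    Discriminator:  D(x) = sigmoid(w * x + c)       (probability that x is real)
    """
    rng = random.Random(seed)
    b = -real_mean     # the generator starts far away from the real data
    w, c = 0.0, 0.0    # the discriminator starts undecided: D(x) = 0.5 everywhere

    for _ in range(steps):
        # Discriminator step: minimize -log D(real) - log(1 - D(fake)),
        # i.e. learn to accept real samples and reject generated ones.
        gw = gc = 0.0
        for _ in range(batch):
            x = rng.gauss(real_mean, 1.0)        # a real sample
            err = sigmoid(w * x + c) - 1.0       # d/dlogit of -log D(x)
            gw += err * x
            gc += err
            y = rng.gauss(0.0, 1.0) + b          # a generated ("fake") sample
            err = sigmoid(w * y + c)             # d/dlogit of -log(1 - D(y))
            gw += err * y
            gc += err
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step: minimize -log D(G(z)) so that the updated
        # discriminator is more likely to accept fresh fakes as real.
        gb = 0.0
        for _ in range(batch):
            y = rng.gauss(0.0, 1.0) + b
            gb += (sigmoid(w * y + c) - 1.0) * w  # chain rule: dlogit/db = w
        b -= lr * gb / batch

    return b


if __name__ == "__main__":
    shift = train_toy_gan()
    print(f"learned generator shift b = {shift:.3f} (real data mean = 2.0)")
```

With these settings the shift `b` should end up close to the real mean of 2.0. At that point the discriminator can no longer tell the two distributions apart and outputs roughly 0.5. Because a linear discriminator can only notice a difference in means, this toy shows the feedback loop, not realistic content generation. GANs that synthesize images use deep neural networks for both players.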

In 2021, FireEye reported that cyber actors used GAN-generated images on social media platforms to promote Lebanese political parties. Multiple influence campaigns conducted by cyber actors associated with nation-states used GAN-generated images to target localized and regional issues. In October 2020, Sensity AI, a firm that specializes in deep fake content and detection, reported over 100,000 computer-generated fake nude images of women created without their consent or knowledge. The creators used an ecosystem of bots on the messaging platform Telegram to facilitate sharing, trading, and selling services associated with deep fake content. Sensity AI also found that approximately 90-95% of deep fake videos since 2018 were based on non-consensual pornography.

Where is the deep fake threat going in the future?

Deep fakes continue to pose a threat to individuals and industries, with potential large-scale impacts on nations, governments, businesses, and society, such as social media disinformation campaigns operated at scale by well-funded nations. Renewed adherence to security protocols such as two-factor authentication and device-based authentication is an elementary first step in countering this threat. It would also be advantageous to invest in strengthening our democratic and media institutions and in new and emerging technologies. Blockchain authentication is one standout possibility, as it holds great potential to standardize and promote the verification of content authenticity.
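In its simplest form, blockchain authentication rests on a hash chain. Each record stores a cryptographic fingerprint of some content plus the hash of the previous record, so altering any earlier record becomes detectable. The Python sketch below illustrates that verification idea for media files under clearly stated assumptions. `ProvenanceLedger`, `Block`, and their methods are hypothetical names, and SHA-256 is an assumed choice of hash. The ledger runs on a single machine with no network or consensus protocol, both of which a real blockchain requires. It illustrates the principle and does not describe any existing authentication system.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List


@dataclass
class Block:
    index: int
    content_hash: str  # SHA-256 fingerprint of the registered media file
    prev_hash: str     # hash of the previous block; this link forms the chain

    def block_hash(self) -> str:
        payload = json.dumps([self.index, self.content_hash, self.prev_hash])
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class ProvenanceLedger:
    """Hypothetical single-machine, append-only ledger of media fingerprints.

    A real blockchain would also replicate the chain across many parties and
    use a consensus protocol; both are omitted in this sketch.
    """
    blocks: List[Block] = field(default_factory=list)

    def register(self, media: bytes) -> Block:
        # Link the new block to the hash of the current last block.
        prev = self.blocks[-1].block_hash() if self.blocks else "0" * 64
        block = Block(len(self.blocks), hashlib.sha256(media).hexdigest(), prev)
        self.blocks.append(block)
        return block

    def chain_is_intact(self) -> bool:
        # Editing any earlier block changes its hash and breaks the next link.
        return all(
            self.blocks[i].prev_hash == self.blocks[i - 1].block_hash()
            for i in range(1, len(self.blocks))
        )

    def is_registered(self, media: bytes) -> bool:
        # Any edit to the media yields a different SHA-256 digest
        # (barring a practically infeasible collision).
        digest = hashlib.sha256(media).hexdigest()
        return self.chain_is_intact() and any(
            b.content_hash == digest for b in self.blocks
        )


if __name__ == "__main__":
    ledger = ProvenanceLedger()
    ledger.register(b"original video bytes")
    ledger.register(b"original press photo bytes")
    print(ledger.is_registered(b"original video bytes"))  # True
    print(ledger.is_registered(b"altered video bytes"))   # False
```

A match shows only that a circulating copy is byte-for-byte identical to what was registered. It cannot show that the registered original was itself authentic.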

DEEPFAKE SCENARIO EXAMPLES

- National security & law enforcement: inciting violence

- Producing false evidence about climate change

- Deep fake kidnapping

- Corporate sabotage and election influence

- Corporate: enhanced social engineering attacks

- Cyberbullying

In conclusion, deep fakes, synthetic media, and disinformation in general pose challenges to our society. They can affect individuals and institutions ranging from small businesses to nations. As discussed above, several approaches may help mitigate these challenges, and there are undoubtedly others we have yet to identify. It’s time for a coordinated, collaborative approach.